Import vs Direct Query Power BI: Comprehensive Guide

As you start working with Power BI, you’ll encounter an important decision: how do I connect to data in my reports, and what is the difference between Import and Direct Query in Power BI? If you search for answers, you’ll find a few blogs aimed at technical consultants that cover the significant differences in a few thin sentences. We wanted to write a comprehensive article for a broader audience.

Your chosen connection method will depend on the source database and your analytics needs. Once connected, you can visualize and analyze the data in your reports using Power BI’s interactive dashboard. That’s where “Import” and “Direct Query” come into play. But what does Import vs Direct Query Power BI mean?

Both allow you to uncover hidden opportunities using data. Data governance for Power BI is essential for operationalizing how data is refreshed in analytics projects. With governance in place, you’re not guessing between the Direct Query method (aka live) and Import (aka extract) for each report, because the choice is an established offering for analytics projects. Teams often define a few data freshness options and then apply those limits to all incoming reports. This also helps keep data access credentials up to date, so reports show the most recent information available.

Introduction to Connecting to Data in Power BI

You may also encounter this situation when you realize that the DirectQuery feature doesn’t work with your underlying data source or that the Import feature doesn’t update fast enough. You may wonder if you need to rebuild your data models.

The decision extends beyond databases and applies to various data sources such as online services, spreadsheets, APIs, and more.

In Power BI, users choose between Import and Direct Query for their analytics needs. This choice becomes noticeable as they set up data connections and build their dashboards.

Choosing between Import and Direct Query in Power BI is, at first, easy to skip past without considering its long-term impact or the implications it may have as your prototype dashboard goes from DEV to PROD. To use Direct Query effectively, it is essential to understand the data connectivity and the underlying data source.

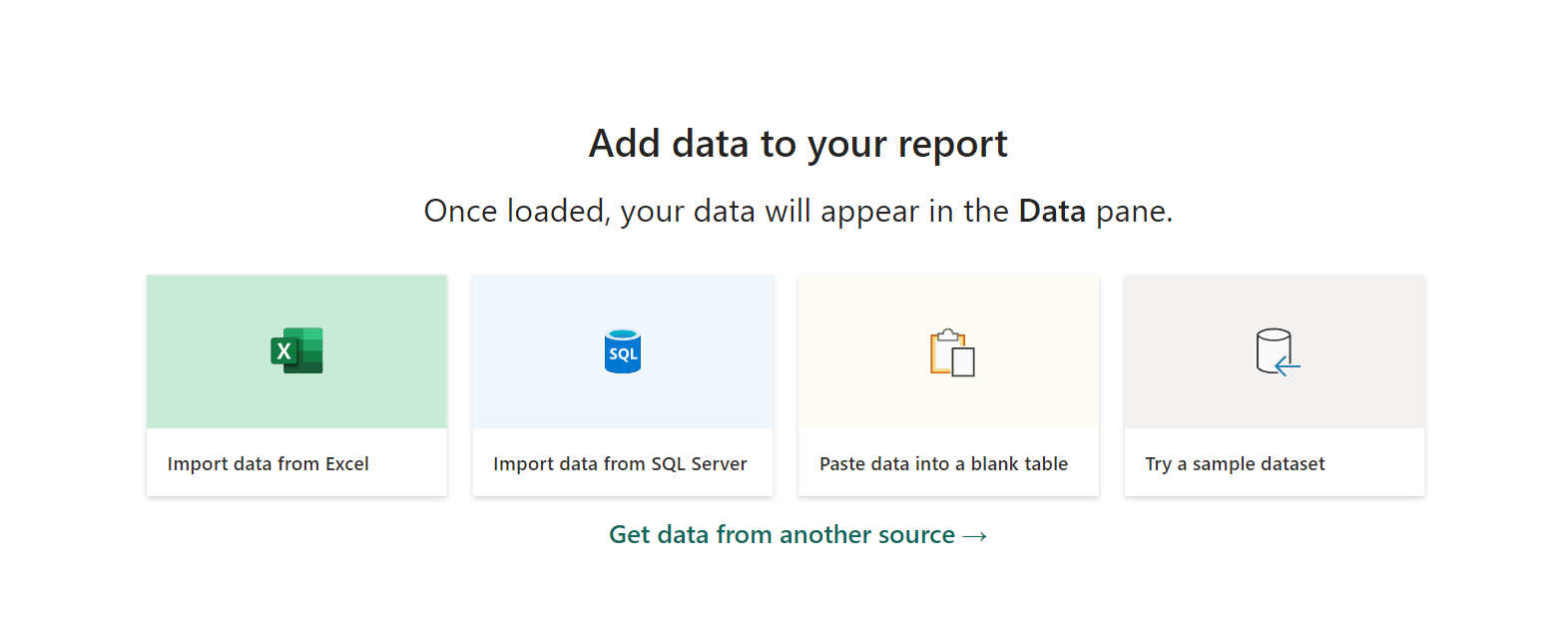

The first time you see the choice between “Import” and “Direct Query” in Power BI is while connecting to data.

Suppose you’re using a relational database like Microsoft SQL Server. In that case, you can import data into Power BI using Import Mode or connect directly to the database using Direct Query Mode for analytics.

As we researched, we found many technical blogs that explain the technical aspects of Power BI Service and Power BI Desktop. Still, we didn’t find content that explained the choice in a way we could easily share with business, sales, or marketing teams, or with executives who use Power BI. Ideally, this comprehensive guide will help both technical and non-technical users, as both should understand the process from multiple perspectives: it determines the overall availability of the data, with upsides and downsides either way.

Consider Import and Direct Query as two different roads leading to the same destination: insights in the Power BI service. Each road has advantages and considerations, and we’ll help you navigate them. Whether you’re just starting your Power BI journey or looking to make more informed choices about data connections, this guide may become your friendly companion.

Import Mode in Power BI is like bringing all your data into Power BI. It’s fast, flexible, and lets you create powerful visualizations. With Import Mode, you can work on your data even when offline, just like playing with building blocks.

On the other hand, Direct Query Mode is more like having a direct line to your data source. Direct Query doesn’t store your data inside Power BI; it queries the source as you use the report. It’s as if you’re looking at a live feed.

Selecting between Import or Direct Query involves critical decisions, like choosing between different game modes.

What is Import Data Mode?

The Import Data Mode in Power BI is like bringing all your data into Power BI’s playground. Here’s a closer look:

The most common method used in Power BI is the Import Data Mode. In this mode, you pull data from various sources (such as databases, spreadsheets, online services, and more) into Power BI.

This is called “Extract” in Tableau Desktop.

Power BI’s internal engine copies and stores the data. Think of it as filling your toy box with all your favorite toys, making them readily available whenever you want to play.

This approach offers several key benefits:

Benefits of Import Data Mode

- Speed: Since the data is stored within Power BI, it can be processed and analyzed quickly. Your reports and visualizations respond almost instantly, providing a smooth user experience.

- Offline Access: In Import Mode, you can work on your reports in Power BI Desktop without a connection to the data source. It’s like having your toys wherever you go without accessing the original data source.

- Data Transformation and Modeling: Import Mode gives you complete control over your data. To build a coherent and insightful dataset, you can shape, clean, and create relationships between tables. This flexibility is like being the master of your toy kingdom, arranging everything just how you want.

How to Import Data in Power BI

Importing data into Power BI is straightforward:

- Data Source Selection: First, you choose the data source you want to import from. This could be a SQL database, an Excel spreadsheet, a cloud service like Azure or Google Analytics, or many others.

- Data Transformation: You can perform data transformations using Power Query, a powerful tool built into Power BI. This step allows you to clean, reshape, and prepare your data for analysis.

- Data Modeling: In this phase, you create relationships between tables, define measures, and design your data model. It’s like assembling your toys in a way that they interact and tell a meaningful story.

- Loading Data: Finally, you load the transformed and modeled data into Power BI. This data is ready to build reports, dashboards, and visualizations.

Data Transformation and Modeling

Data transformation and modeling are critical steps in Import Mode:

- Data Transformation: Power Query allows you to perform various transformations on your data. You can filter out unnecessary information, merge data from multiple sources, handle missing values, and more. This is like customizing your toys to fit perfectly in your playtime scenario.

- Data Modeling: In Power BI Desktop’s Model view, you define relationships between tables. These relationships enable you to create meaningful visuals. It’s similar to connecting different parts of your toys to create an exciting and cohesive storyline.

Performance Considerations

While Import Mode offers many advantages, it’s essential to consider performance factors:

- Data Refresh: As your data evolves, you must regularly refresh it to keep your reports current. The frequency and duration of data refresh can impact the overall performance of your Power BI solution.

- Data Volume: Large datasets can consume a significant amount of memory. Monitoring and optimizing your data model is essential to ensure it doesn’t become unwieldy.

- Data Source Connectivity: The performance of data import depends on the speed and reliability of your data source. Slow data sources can lead to delays in report generation.

- Data Compression: Power BI uses compression techniques to reduce the size of imported data. Understanding how this compression works can help you manage performance effectively.
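To build intuition for the compression point above, here is a minimal Python sketch of dictionary encoding, one of the techniques columnar in-memory engines commonly use. It is an illustration only, not Power BI’s actual implementation; the column values and the function name are made up for the example.

```python
def dictionary_encode(column):
    """Replace each value with a small integer ID plus a lookup table."""
    dictionary = {}  # value -> integer ID, in order of first appearance
    encoded = []
    for value in column:
        if value not in dictionary:
            dictionary[value] = len(dictionary)
        encoded.append(dictionary[value])
    return dictionary, encoded


# Hypothetical low-cardinality column: many rows, few distinct values.
region = ["East", "West", "East", "East", "North", "West"] * 1000

dictionary, encoded = dictionary_encode(region)
print(len(region), "rows stored with", len(dictionary), "distinct values")
```

Columns with few distinct values tend to compress well under this kind of scheme, while high-cardinality columns such as IDs or precise timestamps tend not to, which is one reason removing unneeded columns often shrinks an imported model.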

What is Direct Query Mode?

Direct Query Mode in Power BI is like letting an executive see the data as it currently sits in the database. They are running a query on that database when they open the report. This is great for dashboards that only have a few users; if the database is optimized for heavier traffic, you can support more. However, as a rule of thumb, it’s best to keep Direct Query for those who need to access data immediately and use Import for everything else.

The usual question of “when was this refreshed?” will have the exciting answer of “when you opened the report.”

This is called “Live” in Tableau Desktop.

In Direct Query Mode, you establish a direct connection from Power BI to your data source, such as a database, an online service, or other data repositories. Instead of importing and storing the data within Power BI, it remains where it is. Imagine it as if you’re watching your favorite TV show as it’s being broadcast without recording it. This means you’re always viewing the most up-to-date information, which can be crucial for scenarios where real-time data is essential.
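The difference between the two modes can be sketched in a few lines of Python. This is a toy model, not how Power BI works internally: an in-memory SQLite database stands in for the source system, and the table and function names are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for the source system (assumption).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
source.execute("INSERT INTO orders VALUES (1, 100.0)")


def refresh_import(conn):
    """Import-style: copy the rows once and keep them as a snapshot."""
    return conn.execute("SELECT id, amount FROM orders").fetchall()


def direct_query_total(conn):
    """Direct Query-style: ask the source every time the report is viewed."""
    return conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]


snapshot = refresh_import(source)  # data copied at refresh time
source.execute("INSERT INTO orders VALUES (2, 50.0)")  # source changes afterwards

import_total = sum(amount for _, amount in snapshot)
live_total = direct_query_total(source)
print(import_total, live_total)
```

The snapshot total still reflects only the original order, while the live query includes the new row. That gap is what a refresh closes in Import Mode, and what Direct Query avoids at the cost of hitting the source on every view.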

Benefits of Direct Query Mode

- Real-time or Near-real-time Data: Direct Query provides access to the latest data in your source system. This is invaluable when monitoring rapidly changing information, such as stock prices, customer interactions, or sensor data.

- Data Source Consistency: Because data isn’t duplicated in Power BI, your reports stay consistent with the source system. Any changes in the source data are reflected in your reports, eliminating the risk of using outdated information.

- Resource Efficiency: Direct Query Mode doesn’t consume as much memory as Import Mode since it doesn’t store data internally. This can be advantageous when dealing with large datasets or resource-constrained environments.

Supported Data Sources

Power BI’s Direct Query Mode supports a variety of data sources, including:

- Relational Databases: This includes popular databases like Microsoft SQL Server, Oracle, MySQL, and PostgreSQL, among others.

- Online Services: You can connect to cloud-based services like Azure SQL Database, Google BigQuery, and Amazon Redshift.

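- On-premises Data: Direct Query can also access data stored on your organization’s servers, provided a network connection is available (for reports published to the Power BI service, this typically means an on-premises data gateway).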

- Custom Data Connectors: Power BI offers custom connectors that allow you to connect to various data sources, even those not natively supported.

Creating a Direct Query Connection

Setting up a Direct Query connection involves a few steps:

- Data Source Configuration: Start by defining the connection details to your data source, such as server address, credentials, and database information.

- Query Building: Once connected, you can create queries using Power BI’s query editor to specify which data you want to retrieve. Think of this as choosing the TV channel you want to watch.

- Modeling and Visualization: As with Import Mode, you’ll need to design your data model and create visualizations in Power BI, but with Direct Query, the data stays in its original location.

Performance Considerations

While Direct Query offers real-time data access, there are some performance considerations to keep in mind:

- Data Source Performance: The speed of your Direct Query connection depends on the performance of your data source. Slow or poorly optimized databases delay data retrieval. That is data source performance: the distance between the data source and the dashboard. Dashboard-level performance, meaning the complexity of your calculations and visuals, is a separate concern. Both are significant, and both are different.

- Query Optimization: Efficiently written queries can significantly improve performance. Power BI’s query editor provides tools to help you optimize your queries.

- Data Volume: Large datasets may still impact performance, especially when complex calculations are involved. Efficient data modeling is essential to mitigate this.

- Data Source Compatibility: Not all data sources are compatible with Direct Query. Ensure your data source supports this mode before attempting to create a connection.

Direct Query Mode is a powerful tool when you need real-time access to your data, but understanding its benefits, limitations, and how to optimize its performance is crucial for a successful implementation in your Power BI projects.

When to Use Import vs. Direct Query

In Power BI, how you access and interact with your data is not one-size-fits-all. It depends on your specific needs and the nature of your data. In this section, we’ll explore the scenarios that favor the two fundamental data access modes: Import Mode and Direct Query Mode. We’ll also cover Hybrid Models, where you blend the strengths of both modes to create a solution that fits your data analysis requirements. Whether you seek real-time insights, optimized performance, or a careful balance between data freshness and resource efficiency, this section will guide you toward the right choice for your scenario.

Scenarios Favoring Import Mode

- Data Exploration and Transformation: Import Mode shines when you clean, shape, and transform your data before creating reports. It allows you to consolidate data from multiple sources, perform calculations, and create a unified data model within Power BI. This is especially valuable when dealing with disparate data sources that require harmonization.

- Offline Accessibility: Importing data into Power BI provides the advantage of working offline. Once you’ve imported the data, you can create, modify, and view reports without needing a live connection to the source. This is crucial for situations where consistent access to data is required, even when the internet connection is unreliable or unavailable.

- Complex Calculations: Import Mode allows you to perform complex calculations, aggregations, and modeling within Power BI. This is advantageous when you need to create advanced KPIs, custom measures, or calculated columns that rely on data from various sources.

- Performance Optimization: You can optimize performance by importing data into Power BI. Since the data resides within Power BI’s internal engine, queries and visualizations respond quickly, providing a smooth user experience, even with large datasets.

- Data Security and Compliance: Import Mode is often favored when data security and compliance are paramount. By controlling access to the imported data, you can protect sensitive information, making it suitable for industries with strict regulatory requirements.

Scenarios Favoring Direct Query Mode

- Real-time Data Analysis: Direct Query Mode is essential when you require up-to-the-minute data insights. It’s perfect for monitoring stock prices, tracking website traffic, or analyzing real-time sensor data. With Direct Query, you see changes as they happen.

- Large and Evolving Datasets: When working with massive datasets that are frequently updated, importing all the data can be impractical or resource-intensive. Direct Query ensures you always work with the most current information without worrying about data refresh schedules or storage limitations.

- Data Source Consistency: In situations where maintaining data source consistency is critical, such as financial reporting or compliance monitoring, Direct Query ensures that your reports reflect the exact state of the source data, avoiding any discrepancies or data staleness.

- Resource Efficiency: Direct Query is resource-efficient since it doesn’t store data internally. This makes it suitable for scenarios where memory or storage constraints are a concern, especially in large enterprises or organizations with limited IT resources.

Hybrid Models: Combining Import and Direct Query

In some cases, the best approach involves combining both Import and Direct Query modes in what is known as a “Hybrid Model.” Here’s when and why you might choose this approach:

- A blend of Historical and Real-time Data: Hybrid models are beneficial when you need a combination of historical data (imported for analysis) and real-time data (accessed through Direct Query). For example, you might import historical sales data while using Direct Query to monitor real-time sales.

- Data Volume Management: You can use Import Mode for the most critical or frequently accessed data and Direct Query for less frequently accessed or rapidly changing data. This way, you strike a balance between performance and data freshness.

- Combining Data Sources: Sometimes, you may need to combine data from sources best suited for different modes. For example, you might import financial data from a spreadsheet (Import Mode) and connect to an external API for real-time market data (Direct Query).

- Optimizing Performance: By strategically choosing where to use Import and Direct Query, you can optimize the overall performance of your Power BI solution. For instance, you can alleviate resource constraints by using Direct Query for the most resource-intensive data sources while leveraging Import Mode for the rest.

Hybrid models provide flexibility and allow you to tailor your Power BI solution to meet your organization’s specific needs, combining the strengths of both Import and Direct Query modes to maximize efficiency and data freshness.

A Comprehensive Overview of Data Refreshes When Choosing Between Import vs Direct Query

To navigate this landscape effectively, one must understand the nuances of data access modes. In this section of the “Power BI Comprehensive Guide,” we delve into two pivotal aspects: “Scheduled Refresh in Import Mode” and “Real-time Data in Direct Query Mode.” These elements are the gears that keep your data engine running smoothly, offering distinct advantages for different scenarios.

Scheduled Refresh in Import Mode automates keeping your data up-to-date, ensuring your reports and dashboards reflect the latest information. We’ll explore its benefits, such as automated data updates and historical analysis while considering factors like data source availability and performance impact.

Real-time Data in Direct Query Mode opens a window into the world of instantaneous insights. Discover how this mode allows you to access data as it happens, perfect for scenarios like stock market analysis, web analytics, and IoT data monitoring. However, we’ll also delve into the critical considerations, such as data source performance and query optimization.

Lastly, we’ll examine the critical topic of Data Source Limitations, where not all data sources are created equal. Understanding the compatibility and capabilities of your data sources, especially in the context of Direct Query Mode, is vital for a successful Power BI implementation.

As we navigate these aspects, you’ll gain a deeper understanding of the mechanics that drive data access in Power BI, empowering you to make informed decisions about which mode suits your unique data analysis needs. So, let’s dive into the world of data access modes and uncover the tools you need for data-driven success.

Scheduled Refresh in Import Mode

Scheduled Refresh is critical to working with Import Mode in Power BI. This feature lets you keep your reports and dashboards up-to-date with the latest data from your source systems. Here’s a more detailed explanation:

Scheduled Refresh allows you to define a refresh frequency for your imported data. For example, you can set it to refresh daily, hourly, or even more frequently, depending on the requirements of your reports and the frequency of data updates in your source systems. Power BI will re-query the data sources during each scheduled refresh, retrieve the latest information, and update your datasets.

Scheduled Refresh is beneficial in several scenarios:

- Automated Data Updates: It automates the data retrieval and refresh process, reducing manual efforts. This is particularly useful for large datasets or multiple data sources.

- Timely Insights: Scheduled Refresh ensures that your reports and dashboards always reflect the most current data available. This is essential for data-driven decision-making.

- Historical Analysis: It allows you to maintain a historical record of your data, enabling you to analyze trends, track changes over time, and make informed historical comparisons.

However, it’s essential to consider some key factors when setting up Scheduled Refresh:

- Data Source Availability: Your data sources must be accessible and available during the scheduled refresh times. If the data source becomes unavailable, the refresh process may fail.

- Performance Impact: Frequently scheduled refreshes can strain your data source, so balancing data freshness and performance is essential.

- Data Volume: The size of your dataset and the complexity of data transformations can affect the duration of the refresh process. Optimizing your data model and query performance is crucial.
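The trade-off between refresh frequency and data freshness can be put into rough numbers. The sketch below uses a deliberately simplified model (each refresh reads the source at the moment it starts, and refreshes run back to back on a fixed interval); the schedule values are hypothetical.

```python
from datetime import timedelta


def worst_case_staleness(refresh_interval, refresh_duration):
    """Oldest the data can be just before the next refresh completes.

    Simplified model: a refresh reads the source when it starts, so the
    data is already `refresh_duration` old when it lands, and it then
    ages for up to one full interval until the next refresh completes.
    """
    return refresh_interval + refresh_duration


# Hypothetical schedule: refresh every 3 hours, each run taking 20 minutes.
staleness = worst_case_staleness(timedelta(hours=3), timedelta(minutes=20))
print(staleness)
```

If the resulting worst case is longer than your users can tolerate, the options are a more frequent schedule, a faster refresh, or Direct Query for that part of the model.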

Real-time Data in Direct Query Mode

In Direct Query Mode, real-time data access is one of its defining features. Here’s a more detailed explanation:

Direct Query Mode lets you connect to data sources in real time or near-real time. This means that when new data is added or updated in the source system, it becomes available for analysis the next time your Power BI report queries the source. It’s like having a live feed of your data, and it’s especially valuable in scenarios where timeliness is critical.

Some use cases for real-time data in Direct Query Mode include:

- Stock Market Analysis: Traders and investors rely on up-to-the-second stock price data to make informed decisions.

- Web Analytics: Businesses need real-time insights into website traffic, click-through rates, and user behavior to optimize their online presence.

- IoT Data Monitoring: Industries like manufacturing and healthcare depend on real-time data from IoT sensors to ensure smooth operations and patient safety.

Real-time data in Direct Query Mode comes with considerations:

- Data Source Performance: The performance of your data source becomes crucial, as any delays or downtimes in the source system will directly impact the real-time data feed.

- Query Optimization: Queries in Direct Query Mode should be optimized to minimize latency and ensure fast response times.

Data Source Limitations

While Power BI supports a wide range of data sources, it’s essential to be aware of potential limitations, especially in Direct Query Mode. Here’s an overview:

- Data Source Compatibility: Not all data sources are compatible with Direct Query Mode. Some sources might not support real-time access or have limited capabilities when used in this mode. It’s essential to check the documentation and compatibility of your data source with Power BI.

- Complex Transformations: In Direct Query Mode, some complex data transformations possible in Import Mode may not be supported. This can impact your ability to create calculated columns or measures directly within Power BI.

- Performance Considerations: Direct Query Mode’s performance depends heavily on your data source’s performance. Slow or resource-intensive queries on the source side can lead to slower response times in Power BI.

Understanding the limitations and capabilities of your data sources is crucial for making informed decisions when choosing between Import Mode and Direct Query Mode in your Power BI projects.

Performance Considerations Using Import vs Direct Query Power BI

Factors Affecting Import Mode Performance

In import mode, performance considerations are essential for efficient data analysis. The primary factor influencing import mode performance is the size and complexity of your dataset. When dealing with larger datasets, loading data into the local or in-memory cache can become resource-intensive and time-consuming. As the dataset grows, memory usage increases, potentially leading to performance bottlenecks. Additionally, the complexity of data transformations and calculations within the data model can slow down import mode. To mitigate this, data model optimization becomes crucial, ensuring that the model is streamlined and calculations are as efficient as possible. Another factor affecting performance is the hardware resources available. Adequate RAM and CPU power are necessary to support large datasets and complex calculations. Lastly, the frequency of data refreshes should be carefully considered. Frequent refreshes can strain system resources and impact the user experience, so finding the right balance between data freshness and performance is essential.

Factors Affecting Direct Query Mode Performance

Direct Query mode, on the other hand, introduces a different set of performance considerations. This mode connects to the data source in real time, eliminating the need to load data into a local cache. However, the speed and reliability of the data source connection become critical factors. A slow or unreliable connection can lead to delays in query execution, impacting the user experience. Additionally, the complexity of queries plays a significant role in Direct Query mode. Complex queries involving multiple data sources or intricate calculations can result in slower performance. It’s imperative to optimize your queries to ensure they run efficiently. Furthermore, the performance of Direct Query mode relies heavily on optimizing the data source itself. Proper indexing and tuning of the data source are essential for fast query execution. Lastly, managing concurrency is vital in this mode, as multiple users accessing the same data source concurrently can lead to performance challenges. Therefore, implementing effective concurrency management is necessary to maintain a smooth user experience.

Optimization Tips for Import vs Direct Query Power BI

Several optimization strategies can be employed to enhance the performance of both import and Direct Query modes. First and foremost, data cleansing should be a priority. Cleaning and preprocessing the data before importing or connecting in Direct Query mode can significantly reduce unnecessary data, improving performance. Data compression techniques should also be utilized to reduce data size and optimize memory usage, especially in import mode. Implementing appropriate indexing strategies is crucial in both modes. In Direct Query mode, this ensures that tables in the data source are well-indexed for faster query execution, while in import mode, it helps with data retrieval efficiency. Aggregations can be employed in import mode to precompute summarized data, substantially boosting query performance. Partitioning large datasets is another valuable technique for import mode, as it helps distribute the load and improves data refresh times. Regular performance monitoring is essential to identify and address bottlenecks, ensuring data analysis and reporting remain efficient over time.
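As a rough illustration of the aggregation idea mentioned above, the following Python sketch precomputes a small summary from detail rows. It only shows the concept; the data and function name are invented, and Power BI has its own mechanisms for defining aggregations.

```python
from collections import defaultdict

# Hypothetical detail rows: (date, product, amount).
sales = [
    ("2024-01-01", "A", 10.0),
    ("2024-01-01", "B", 5.0),
    ("2024-01-02", "A", 7.5),
    ("2024-01-02", "A", 2.5),
]


def build_daily_aggregate(rows):
    """Precompute the total amount per date, once, at load time."""
    totals = defaultdict(float)
    for date, _product, amount in rows:
        totals[date] += amount
    return dict(totals)


daily_totals = build_daily_aggregate(sales)

# A daily-total visual can now read the small summary
# instead of scanning every detail row.
print(daily_totals["2024-01-02"])
```

The summary has one entry per day no matter how many detail rows exist, so visuals that only need daily totals do far less work per interaction.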

Security and Data Sensitivity when Using Import vs Direct Query Power BI

Data Security in Import Mode

Regarding data security in import mode, protecting the data stored in the local cache is paramount. Access control measures should be implemented to restrict data access based on user roles and permissions. This ensures that only authorized individuals can view and interact with sensitive data. Encryption is another critical aspect of data security at rest and in transit. Encrypting the data protects it from unauthorized access or interception during transmission. Furthermore, maintaining audit logs is essential for tracking data access and changes made to the data model. This auditing capability enhances security and aids in compliance and accountability efforts.

Data Security in Direct Query Mode

In Direct Query mode, data security focuses on securing data at the source. Secure authentication methods should be implemented to ensure that only authorized users can access the data source. Proper authorization mechanisms must be in place to control access at the source level, ensuring that users can only retrieve the data they are entitled to view. Additionally, data masking techniques can be employed to restrict the exposure of sensitive information in query results. By implementing data masking, you protect sensitive data from being inadvertently exposed to unauthorized users, maintaining high data security and privacy. Overall, in both import and Direct Query modes, a robust data security strategy is vital to safeguard sensitive information and maintain the trust of users and stakeholders.
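To make the data masking idea concrete, here is a small Python sketch of one possible masking rule for email addresses. In practice masking is usually configured in the source database rather than written by hand; the function name and the rule itself are assumptions for illustration.

```python
def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"  # not a recognizable address, so hide everything
    return local[0] + "*" * (len(local) - 1) + "@" + domain


print(mask_email("jane.doe@example.com"))
```

Report users without elevated permissions would then see only the masked form, while the underlying value stays protected at the source.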

Compliance and Privacy Considerations: Import vs Direct Query Power BI

Compliance and privacy considerations are paramount in data analysis and reporting using import or Direct Query modes. Ensuring compliance with regulations such as GDPR and HIPAA is a top priority. This involves controlling data access, implementing encryption measures, and defining data retention policies that align with legal requirements. Data residency is another critical aspect to consider. Determining where your data is stored and transmitted is essential to ensure compliance with regional data residency regulations and restrictions. Data anonymization or pseudonymization should also be part of your compliance strategy to protect individual privacy while still allowing for meaningful analysis. Furthermore, consent management mechanisms should be in place, enabling users to provide explicit consent for data processing and sharing. These considerations collectively form a robust compliance and privacy framework that ensures your data analysis practices adhere to legal and ethical standards.

Data Modeling and Transformation

Data modeling in import mode involves structuring your data to optimize the efficiency of data analysis. One of the critical principles often applied in this mode is the use of a star schema. Data is organized into fact tables and dimension tables in a star schema. Fact tables contain the core business metrics and are surrounded by dimension tables that provide context and attributes related to those metrics. This schema design simplifies query performance, allowing for more straightforward navigation and data aggregation.

Calculated columns play a crucial role in import mode data modeling. By creating calculated columns for frequently used calculations, you can improve query speed. These calculated columns can encompass various calculations, such as aggregations, custom calculations, or even derived dimensions, which simplify and expedite generating insights from your data. Furthermore, defining relationships between tables is essential in import mode to ensure data can be accurately and efficiently navigated. Properly defined relationships enable users to create meaningful reports and visualizations.
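The star schema and relationships described above can be sketched with a tiny example. This uses Python’s built-in SQLite module purely as a stand-in; the table and column names are hypothetical, and the join plays the role that a relationship plays in a Power BI model.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension table: descriptive attributes.
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
    -- Fact table: numeric measures plus keys pointing at dimensions.
    CREATE TABLE fact_sales (product_id INTEGER, amount REAL);
    INSERT INTO dim_product VALUES (1, 'Bikes'), (2, 'Helmets');
    INSERT INTO fact_sales VALUES (1, 500.0), (1, 300.0), (2, 40.0);
""")

# The join on product_id plays the role of a model relationship.
sales_by_category = dict(conn.execute("""
    SELECT d.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS d ON d.product_id = f.product_id
    GROUP BY d.category
""").fetchall())
print(sales_by_category)
```

Keeping measures in the fact table and descriptive attributes in dimension tables means each new way of slicing the data is just another dimension to join, not a reshaping of the facts.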

Data Modeling in Direct Query Mode

In Direct Query mode, data modeling focuses on optimizing query performance rather than designing data structures in the local cache. Crafting efficient SQL queries is paramount in this mode. Ensuring your queries are well-structured and utilizing database-specific optimizations can significantly impact query response times. Query optimization techniques, such as query folding, are valuable for pushing data transformations back to the data source, reducing the amount of data transferred and processed by the reporting tool.

Additionally, proper indexing of tables in the data source is critical. A well-indexed data source can dramatically improve query execution speed. Indexes enable the database to quickly locate the necessary data, reducing the time it takes to retrieve and process results. Data modeling in Direct Query mode is closely tied to the performance optimization of the underlying data source. Ensuring the data source is well-tuned for query performance is essential for delivering fast and responsive reports.
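The effect of indexing on the source can be observed with a small experiment. The sketch below uses SQLite only because it ships with Python; your actual source database will have its own tooling for inspecting query plans, and the table and index names here are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("East", 10.0), ("West", 20.0), ("East", 5.0)],
)

QUERY = "SELECT SUM(amount) FROM sales WHERE region = ?"


def plan(connection):
    """Return the planner's description of how QUERY would run."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + QUERY, ("East",)).fetchall()
    return " ".join(row[-1] for row in rows)  # last column holds the detail text


plan_before = plan(conn)  # expected to describe a full table scan
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
plan_after = plan(conn)  # expected to mention idx_sales_region

print(plan_before)
print(plan_after)
```

Before the index exists, the planner has to scan the whole table for every filtered query; afterwards it can look up matching rows through the index. In Direct Query mode this kind of lookup happens every time a user interacts with a filtered visual, so the difference adds up quickly.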

Differences and Limitations: Visualization and Reporting

Building Reports in Import Mode

Building reports in import mode offers several advantages, primarily regarding the complexity and richness of visualizations and dashboards that can be created. Since data is stored locally in a cache, it is readily available for immediate manipulation and visualization. This means you can make interactive and visually appealing reports with various visual elements, including charts, graphs, and complex calculated fields. However, there are limitations to consider. Refreshes can be slow, especially when dealing with large datasets. Additionally, the data is only as current as the last scheduled refresh, so there is a lag between a change in the source and its appearance in reports.
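The lag between refreshes is easy to quantify once a schedule is chosen. The helper below is a rough sketch with a hypothetical name: it takes the daily refresh times and returns the longest gap between consecutive refreshes, wrapping past midnight. It ignores how long each refresh takes to run, so real staleness will be somewhat higher.

```python
from datetime import time


def worst_case_lag_hours(refresh_times):
    """Largest gap, in hours, between consecutive daily refreshes."""
    minutes = sorted({t.hour * 60 + t.minute for t in refresh_times})
    if not minutes:
        raise ValueError("at least one refresh time is required")
    day = 24 * 60
    # Gap from each refresh to the next one, wrapping past midnight.
    # A single daily refresh yields a gap of 0, which means a full day.
    gaps = [
        (later - earlier) % day or day
        for earlier, later in zip(minutes, minutes[1:] + minutes[:1])
    ]
    return max(gaps) / 60


business_hours = worst_case_lag_hours([time(6), time(12), time(18)])
evenly_spaced = worst_case_lag_hours([time(h) for h in range(0, 24, 3)])
```

With refreshes at 06:00, 12:00, and 18:00 the longest gap is the overnight one, so a report opened just before 06:00 can be up to 12 hours behind. Eight evenly spaced refreshes bring that down to 3 hours.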

Building Reports in Direct Query Mode

Building reports in Direct Query mode offers real-time data access without the need for data duplication. This mode is well-suited for scenarios where up-to-the-minute data is critical. However, the level of complexity in visualizations may be limited compared to import mode. Because every interaction triggers a live query against the source, some complex visualizations may not be feasible within acceptable response times. High-concurrency scenarios can also impact query responsiveness, as multiple users accessing the same data source concurrently may experience delays in query execution.

Deployment and Sharing

Publishing Reports in Import Mode

Publishing reports in import mode is relatively straightforward, as the reports are self-contained with data stored in the local cache. These reports can be published on various platforms and accessed by users without directly connecting to the original data source. Users can interact with these reports offline, which can be advantageous when internet connectivity is limited. However, managing data refresh schedules effectively is essential to ensure that the data in the reports remains up-to-date.

Publishing Reports in Direct Query Mode

Publishing reports in Direct Query mode requires a different approach. These reports are connected to live data sources, and as such, they require access to the data source to provide interactivity. Users must have access to the data source to interact with the reports effectively. This mode’s dependency on data source availability and performance should be considered when publishing reports. Ensuring the data source is maintained correctly and optimized to support the reporting workload is essential.

Sharing Options and Limitations

Sharing options differ between import and Direct Query modes due to their distinct characteristics. Import mode reports are more portable, containing the data within the report file. Users can share these reports independently of the data source, simplifying distribution. In contrast, Direct Query reports have more stringent requirements since they rely on a live connection to the data source. This means that sharing Direct Query reports may involve granting access to the data source or hosting the reports on a platform that provides the necessary data connectivity. These considerations should be factored into your sharing and distribution strategy.

Best Practices: Import vs. Direct Query Power BI

Like most SaaS products, Power BI is packed with decisions that only prove optimal or suboptimal once they are tested. We recommend you begin testing as soon as possible to confirm that your system can handle Direct Query or Import mode. Import mode has a limit of 8 total schedule windows unless you decide to use the Power BI REST API. We will save that for another blog, but know that it is a good step for batch-style refreshes that can be triggered from standard programming languages or data engineering services.
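For orientation only, the shape of a refresh triggered through the REST API can be sketched with Python's standard library. The sketch builds the request without sending it. The workspace ID, dataset ID, and token are placeholders, and obtaining a valid Azure AD access token is assumed to happen elsewhere. Check the endpoint and the notifyOption value against the current Power BI REST API reference before relying on them.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(group_id, dataset_id, access_token):
    """Build (but do not send) a POST asking the service to refresh a dataset."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values; replace them with real IDs and a real token.
request = build_refresh_request("WORKSPACE_ID", "DATASET_ID", "ACCESS_TOKEN")
# To actually trigger the refresh: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the call easy to wrap in a scheduler or a data pipeline step, which is where batch-style refreshes usually live.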

Best Practices for Import Mode

To optimize performance in import mode, several best practices should be followed. First, data models should be optimized for speed and efficiency. This includes using star schemas, calculated columns, and well-defined relationships between tables. Data compression and aggregation techniques should be employed to reduce data size and memory usage. Scheduled data refreshes should run during off-peak hours to minimize user disruption. Monitoring and managing memory usage is essential to prevent performance degradation over time, as large datasets can consume substantial system resources.
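As an illustration of aggregation as a size-reduction technique, the following sketch collapses transaction-level rows to one row per date and product before they would be loaded. The function name and the sample rows are hypothetical; in practice this step usually happens in the source query or a transformation layer rather than in Python.

```python
from collections import defaultdict


def aggregate_to_daily(transactions):
    """Collapse (date, product, amount) rows to one row per date and product."""
    totals = defaultdict(float)
    for date, product, amount in transactions:
        totals[(date, product)] += amount
    return [
        (date, product, total)
        for (date, product), total in sorted(totals.items())
    ]


transactions = [
    ("2024-01-01", "Bike", 500.0),
    ("2024-01-01", "Bike", 700.0),
    ("2024-01-01", "Helmet", 40.0),
    ("2024-01-02", "Bike", 300.0),
    ("2024-01-02", "Bike", 200.0),
]
daily = aggregate_to_daily(transactions)
```

Five input rows become three. The reduction grows with transaction volume, but it only pays off if reports never need to drill below the daily grain.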

Best Practices for Direct Query Mode

In Direct Query mode, query optimization is critical. Craft efficient SQL queries that fully utilize the database’s capabilities and optimizations. Ensure that tables in the data source are appropriately indexed to facilitate fast query execution. Monitoring data source performance is crucial, as it directly impacts the responsiveness of Direct Query reports. Educating users on query performance considerations and best practices can also help mitigate potential issues and ensure a smooth user experience.

Common Pitfalls to Avoid

Several common pitfalls should be avoided in both Import and Direct Query modes to ensure a successful data analysis and reporting process. Overloading import mode with massive datasets can lead to performance issues, so it’s essential to balance the size of the dataset with available system resources. In Direct Query mode, neglecting to optimize data source indexes can result in slow query performance, harming the user experience. Failing to implement proper data security and compliance measures in either mode can expose sensitive data and lead to legal and ethical issues. Finally, neglecting performance monitoring and optimization in either mode can result in degraded performance and user dissatisfaction.

Use Cases and Examples

Industry-specific Examples

Data analysis and reporting are critical to decision-making and operations in various industries. For instance, in the retail industry, businesses use data analysis to track sales performance, optimize inventory management, and make data-driven pricing decisions. In healthcare, data analysis helps monitor patient outcomes, assess treatment efficacy, and improve healthcare delivery. The finance sector relies on data analysis for tracking financial transactions, detecting fraud, and making investment decisions. Each industry has unique challenges and opportunities where data analysis can drive improvements and efficiencies.

Real-world Use Cases

Real-world use cases for data analysis and reporting are diverse and encompass many applications. Sales analytics is a common use case that involves analyzing sales data by region, product, and time to identify trends and opportunities. Customer engagement analysis helps businesses measure customer satisfaction, engagement, and loyalty, providing insights to enhance the customer experience. Operational efficiency analysis identifies bottlenecks, streamlines processes, and optimizes resource allocation across the organization. These use cases illustrate how data analysis and reporting can be applied across various domains to improve decision-making and drive positive outcomes.

Conclusion

In conclusion, choosing between import mode and Direct Query mode depends on your specific data analysis and reporting needs and on what your data environment can support in terms of performance, security, and compliance.

This decision is also an excellent place to invite others into the conversation and make sure they understand what is happening without extra engineering. For example, do executives need live reports or extracts, and is this the point at which to talk about streaming?

Each mode offers unique advantages and limitations, and a well-informed decision should align with your organization’s goals and requirements. Staying updated on emerging trends and developments in data analysis tools is essential to adapt to evolving needs and technologies. Effective data analysis and reporting are critical for informed decision-making and success in today’s data-driven world.