Uncomplicate Data

ET1, for humans who hate complexity

No ET phone home.

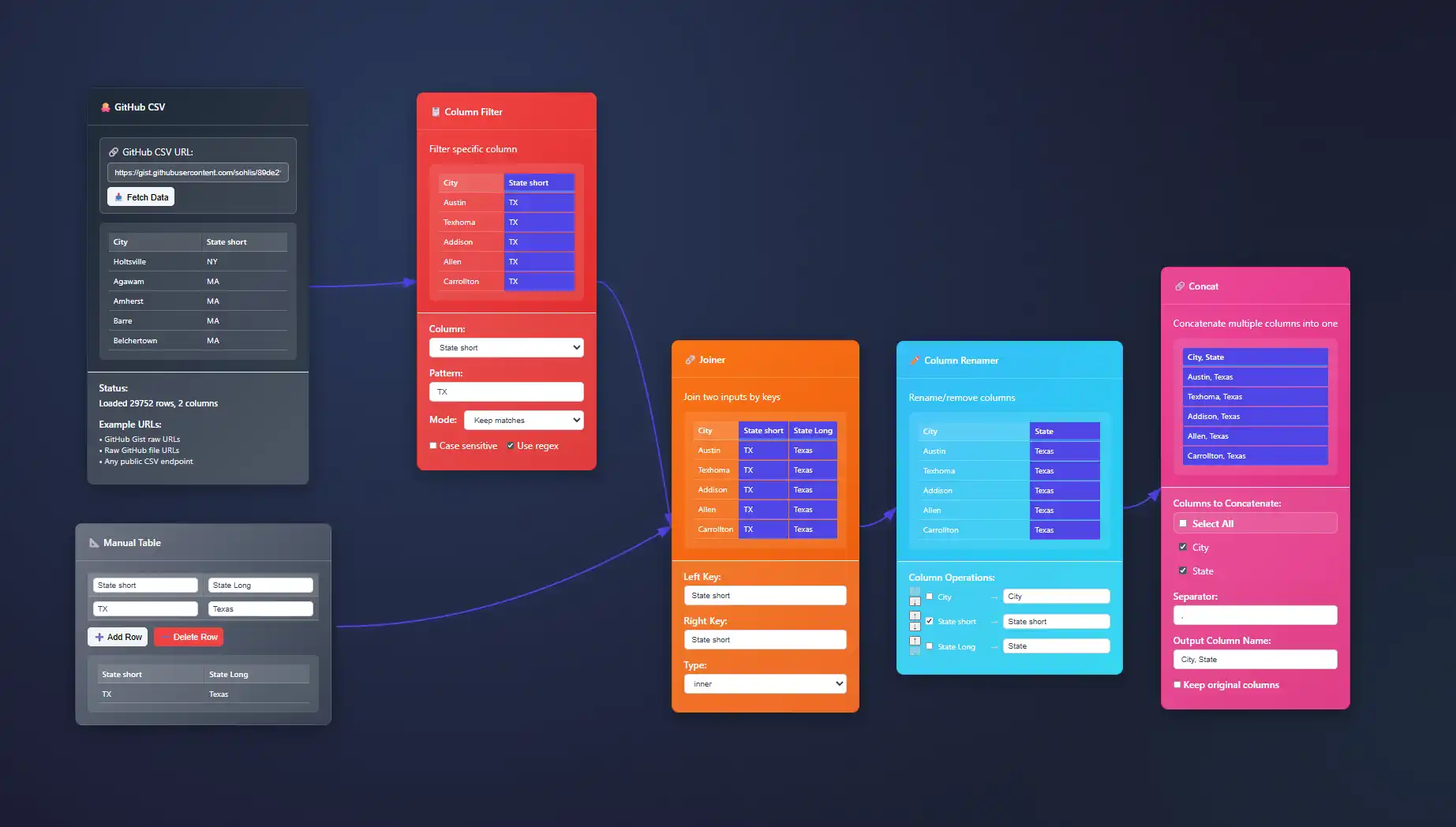

About ET1

ET1 is a visual data workbench that lets you explore, clean, and explain data in memory. It is built for non-technical humans who want to wrangle data without complexity, while staying developer-friendly.

Create

Hands-on ETL

Training Documentation

Use the training material to help you understand more about ET1 and how it helps solve data wrangling problems.

ET1 Basic Training

If you need help getting started, begin here.

ET1 Video Training

Learn the basics, the features, and more.

Future Insight

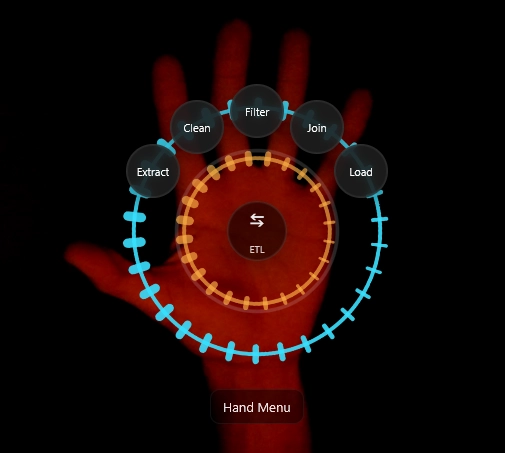

We see the future focused on adoption, training, and creating Easy Tools for anyone. We are building an emerging technology while keeping the user experience creative, inviting, and friendly for all ages.

Inspiration

We are inspired by software, video games, and sci-fi movies like The Matrix, Minority Report, and Iron Man. ET1 is designed to be somewhat similar to legendary software like Alteryx Desktop and KNIME Analytics Platform.

Join beta.


Optimistic vs. Pessimistic Locking in Data Integration Processes

In today's interconnected business landscape, data drives decisions, powers innovation, and inspires new opportunities. Effective data integration is crucial to ensuring processes run smoothly and insights stay relevant. Yet, even with robust frameworks and advanced...

Pipeline Orchestration: Airflow vs. Prefect vs. Dagster Comparison

In today's data-driven world, robust and efficient pipeline orchestration is not just a technical luxury; it's a vital cornerstone of operational excellence. Organizations accumulating massive datasets require intelligent workflows to capture, process,...

Implementing Dead Letter Queues for Failed Data Processing

In today's rapidly evolving data landscape, even the most robust data processing pipelines occasionally encounter failures. Missing or lost data can pose a significant threat to operational efficiency, strategic analytics, and ultimately, competitive advantage....

Converting Batch Pipelines to Stream Processing: Migration Path

Data has become the cornerstone of modern organizations, illuminating crucial insights and accelerating decision-making. As data ecosystems evolve rapidly, businesses reliant on batch processing pipelines are now turning their gaze towards real-time processing...

Payload Compression Strategies in Data Movement Pipelines

In today's rapidly evolving digital landscape, businesses frequently face the challenge of efficiently moving vast volumes of data through their analytics pipelines. As organizations increasingly leverage cloud-based solutions, real-time processing, and integrate...

Idempotent Processing Implementation for Pipeline Reliability

Imagine orchestrating your data pipelines with the confidence of a seasoned conductor leading a symphony—each instrument perfectly synchronized, harmonious, and resilient even under unexpected interruptions. In data engineering, idempotency empowers this confidence by...

Continuous Integration for Data Transformation Logic

In the dynamic landscape of data-driven businesses, speed and accuracy are paramount. Organizations increasingly rely on complex data transformation processes to distill their raw data into actionable insights. But how can teams deliver consistent, reliable data...

Watermark Management in Event-Time Data Processing

In the dynamic landscape of real-time data analytics, precision and timeliness reign supreme. Enterprises consuming vast streams of event-time data face unique challenges: delays, out-of-order events, and the inevitable reality of continuously arriving information. When...

Building a Data Catalog: Tools and Best Practices

In an age where data is not just abundant but overwhelming, organizations are increasingly recognizing the value of implementing a reliable data catalog. Much like a digital library, a data catalog streamlines your data landscape, making it coherent and accessible....