Uncomplicate Data

ET1, for humans who hate complex

No ET phone home.

About ET1

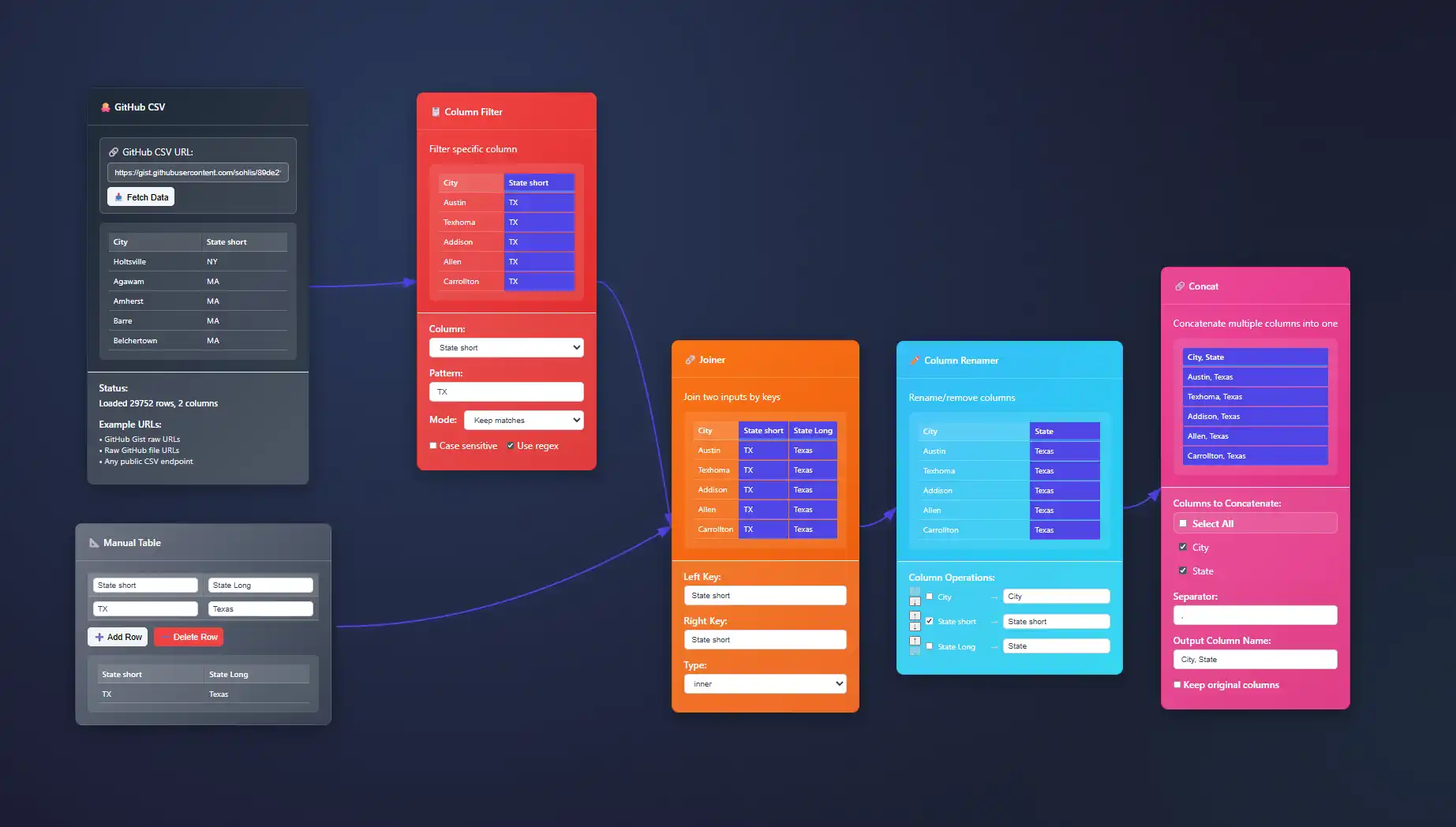

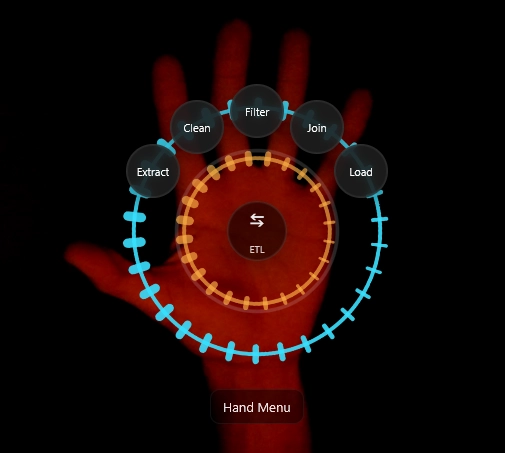

ET1 is a visual data workbench that lets you explore, clean, and explain data in-memory. Built for non-technical humans (but developer-friendly) who want to wrangle data without complexity.

Create

Hands-on ETL

Training Documentation

Use the training material to help you understand more about ET1 and how it helps solve data wrangling problems.

ET1 Basic Training

If you need help getting started, begin here.

ET1 Video Training

Learn the basics, the features, and more.

Future Insight

We see the future as focused on adoption, training, and creating Easy Tools for anyone. We are building an emerging technology while maintaining a creative user experience that is inviting and friendly for all ages.

Inspiration

We are inspired by software, video games, and sci-fi movies like The Matrix, Minority Report, and Iron Man. ET1 is designed to be somewhat similar to other legendary software like Alteryx Desktop and KNIME Analytics Platform.

Join beta.

Session Window Implementation for User Activity Analytics

Harnessing user activity data is pivotal for informed decision-making, providing organizations actionable insights into customer behavior, product effectiveness, and strategic optimization opportunities. However, extracting meaningful analysis from continuous,...

Partial Processing Recovery: Resuming Failed Pipeline Steps

In the age of big data, analytics pipelines form the cornerstone of informed and agile strategies for companies aiming to innovate faster and optimize every facet of their operations. However, complicated pipelines running vast amounts of data inevitably encounter...

Feature Flag Implementation for Progressive Data Pipeline Rollout

In today's rapidly evolving data landscape, deploying data pipelines with agility, control, and reduced risk is critical. Feature flags—also known as feature toggles—offer data engineering teams the powerful ability to progressively roll out new features, experiment...

Data Pipeline Canary Deployments: Testing in Production

Imagine rolling out your latest data pipeline update directly into production without breaking a sweat. Sounds risky? Not if you're embracing canary deployments—the strategic practice tech giants like Netflix and Google trust to safely test in real-world conditions....

Tumbling Window vs. Sliding Window Implementation in Stream Processing

In the evolving landscape of real-time data processing, the way organizations utilize data streams can profoundly impact their success. As real-time analytics and data-driven decision-making become the norm, understanding the key differences between tumbling windows...

Snowflake Stored Procedure Optimization for Data Transformation

In an era dominated by data-driven decision-making and rapid data analytics growth, enterprises strategically seek frameworks and platforms enabling robust data transformations with minimal latency and cost. The Snowflake ecosystem stands firmly as one of the leading...

Handling Sensitive Data in ETL Processes: Masking and Tokenization

In an age where data has become the critical backbone fueling innovation, companies grapple daily with the significant responsibility of protecting sensitive information. Particularly within extract-transform-load (ETL) processes, where data is frequently moved,...

Impact Analysis Automation for Data Pipeline Changes

In today's fast-paced data-driven world, decisions are only as good as the data upon which they are based—and that data is only as reliable as the pipelines building and curating its foundations. Business leaders already recognize the immense value of timely, accurate...

Backfill Strategies for Historical Data Processing

Historical data processing can feel like digging into an archaeological expedition. Buried beneath layers of data spanning months—or even years—lies valuable information critical for enhancing strategic decisions, forecasting future trends, and delivering exceptional...