Uncomplicate Data

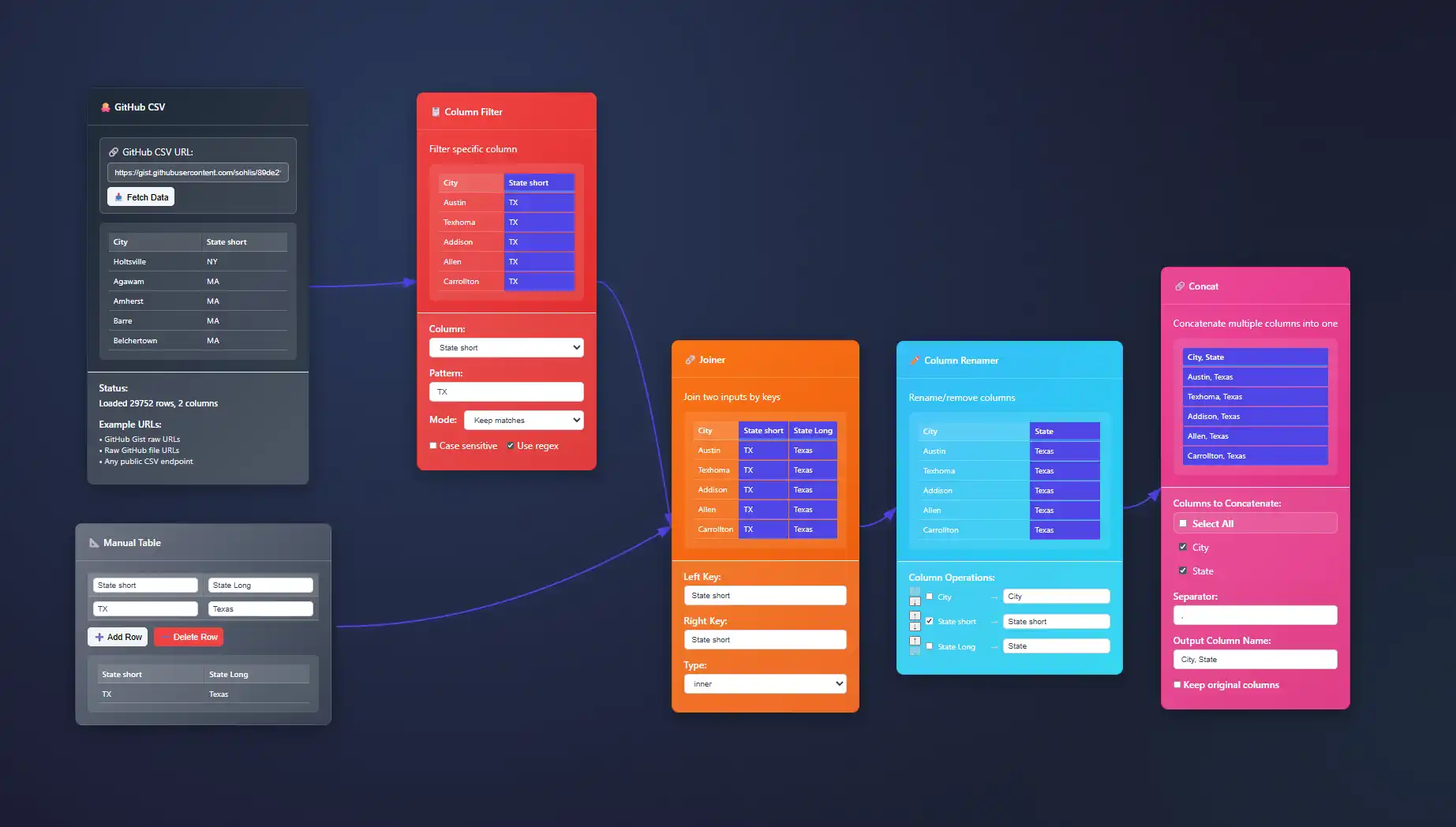

Create end-to-end data analytics solutions in one app that is simple to use and self-explanatory.

About ET1

ET1 is a visual data workbench that lets you explore, clean, and explain data solutions.

- Access ET1

- Unlock 24 nodes

- Free ETL software

- Desktop friendly

- Code on GitHub: Core utilities, UI pieces, and node definitions live in the public repository so you can review how processing works.

- No storage

- ET1.1 + GitHub OAuth

- Unlock 29 nodes

- Workflows + Neon Lake: Neon Lake is the persistent store for workflow data and results. The free tier has no storage. This tier includes limited storage and 1 branch.

- 100/CU-month: CU = Compute Unit, an internal measure we use to track processing cost across transforms.

- 500 MB storage: Storage in Neon Lake for tables and results. Retention policies may apply while we tune usage.

- 1 Branch: This tier includes a single branch only.

- ET1.1 + GitHub OAuth

- Unlock 29 nodes

- Workflows + Neon Lake

- $0.28/CU-hour: Metered compute beyond included quota. Priced per compute-hour equivalent derived from CU usage.

- $0.46/GB-month: Neon Lake storage billed by logical GB-month. We may introduce archival tiers.

- 3 Branches: Each branch has isolated CPU and storage. You only pay for the delta (the difference) between branches.

- SOC 2 • HIPAA • GDPR

- Regional locations

- User-level pricing

- $0.52/CU-hour

- $0.49/GB-month

- 10 Branches

- Unlimited databases & tables

Training Documentation

Use the training material to learn more about ET1 and how it helps solve data-wrangling problems.

ET1 Basic Training

If you need help getting started, begin here.

ET1 Video Training

Learn the basics, the features, and more.

Future Insight

We see the future as focused on adoption, training, and creating Easy Tools for anyone. We are building an emerging technology while maintaining a creative user experience that is inviting and friendly for all ages.

Inspiration

We are inspired by software, video games, and sci-fi movies like The Matrix, Minority Report, and Iron Man.

Join beta.


Sparse Datasets: Techniques When Most Values Are Null

Picture a grand library filled with books—but as you open them, you realize most pages are blank. Welcome to the complex yet exciting world of sparse datasets. In today's data-driven world, datasets are enormous, expansive, and, quite frequently, sparse—filled with...

Cold-Start Optimization: Bootstrapping New Pipelines Fast

In the hyper-competitive digital landscape, being first isn't always about having the biggest budget or dedicated research departments; it's about velocity—how quickly your organization can define needs, develop solutions, and deploy into production. Decision-makers...

Custom Serialization Tricks for Ridiculous Speed

Imagine being able to shave substantial processing time and significantly boost performance simply by mastering serialization techniques. In an environment where analytics, big data, and intelligent data processing are foundational to competitive advantage, optimized...

Out-of-Order Events: Taming the Ordering Problem

In the rapidly evolving landscape of data-intensive businesses, event-driven systems reign supreme. Events flow from countless sources—from your mobile app interactions to IoT sensor data—constantly reshaping your digital landscape. But as volumes surge and complexity...

Checkpoints vs Snapshots: Managing State Without Tears

Imagine managing large-scale applications and data environments without ever fearing downtime or data loss—sounds like a dream, doesn't it? As complexity scales, the reliability of your systems hinges on the right strategy for state management. At the intersection of...

The Batch Size Dilemma: Finding Throughput’s Sweet Spot

In today's hyper-paced data environments, organizations face an intricate balancing act: finding the precise batch size that unlocks maximum throughput, optimal resource utilization, and minimal latency. Whether you're streaming real-time analytics, running machine...

Geolocation Workloads: Precision Loss in Coordinate Systems

In an age where precise geospatial data can unlock exponential value—sharpening analytics, streamlining logistics, and forming the backbone of innovative digital solutions—precision loss in coordinate systems may seem small but can lead to large-scale inaccuracies and...

Art of Bucketing: Hash Distribution Strategies That Actually Work

In today's data-driven world, handling massive volumes of information swiftly and accurately has become an indispensable skill for competitive businesses. Yet, not all data distribution methods are created equal. Among the arsenal of techniques used strongly within...

Compression in Motion: Streaming & Working with Zipped Data

In the modern world of rapid digital innovation, effectively handling data is more important than ever. Data flows ceaselessly, driving analytics, strategic decisions, marketing enhancements, and streamlined operations. However, the sheer size and quantity of data...

Features of Today()+1

Available Now()