Uncomplicate Data

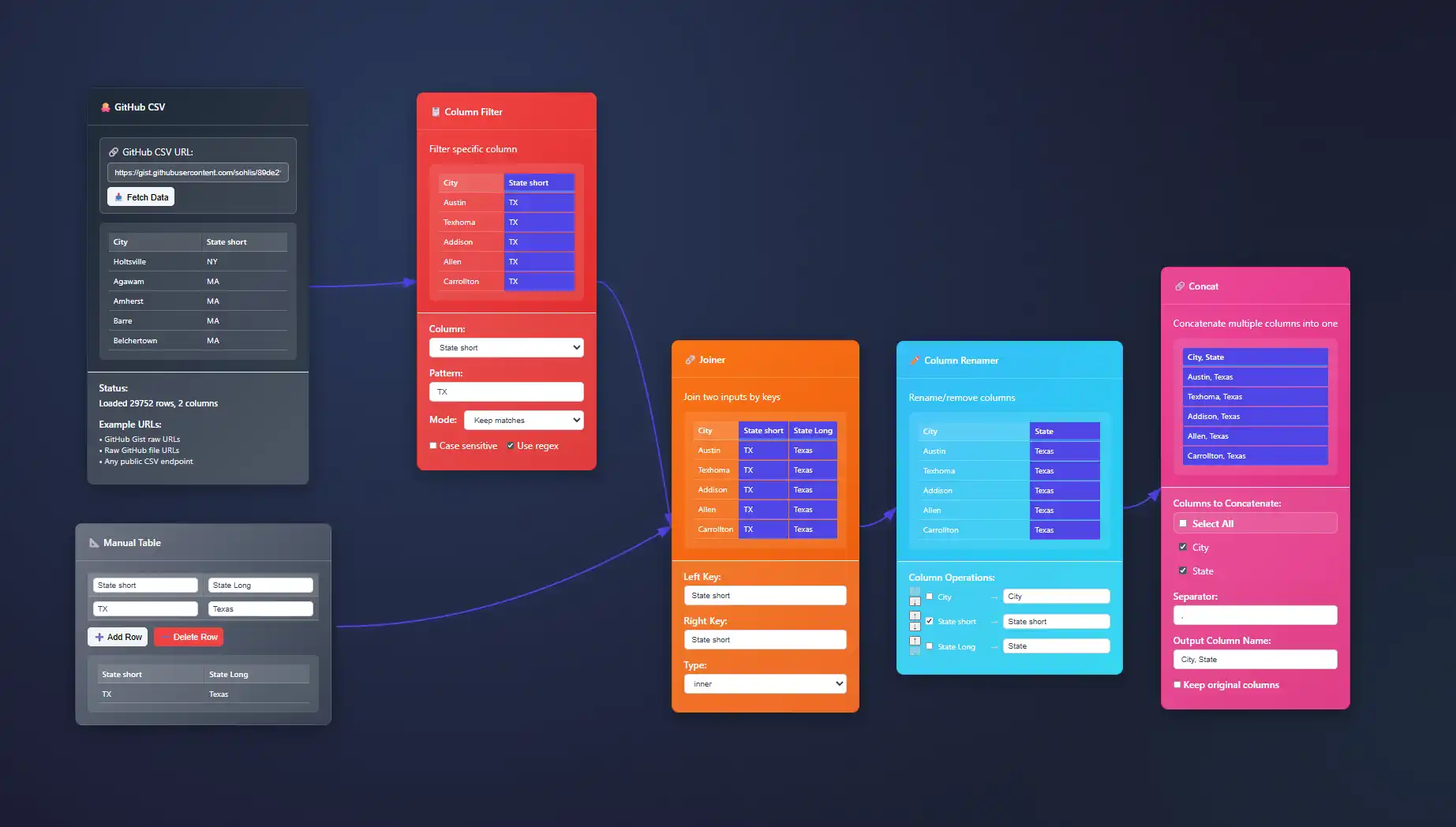

Create end-to-end data analytics solutions in one app that is simple to use and self-explanatory.

About ET1

ET1 is a visual data workbench that lets you explore, clean, and explain data solutions.

- Access ET1

- Unlock 24 nodes

- Free ETL software

- Desktop friendly

- Code on GitHub: Core utilities, UI pieces, and node definitions live in the public repository so you can review how processing works.

- No storage

- ET1.1 + GitHub OAuth

- Unlock 29 nodes

- Workflows + Neon Lake: Neon Lake is the persistent store for workflow data and results. The free tier has no storage. This tier includes limited storage and 1 branch.

- 100/CU-month: CU = Compute Unit. An internal measure we use to track processing cost across transforms.

- 500 MB storage: Storage in Neon Lake for tables and results. Retention policies may apply while we tune usage.

- 1 Branch: This tier includes a single branch only.

- ET1.1 + GitHub OAuth

- Unlock 29 nodes

- Workflows + Neon Lake

- $0.28/CU-hour: Metered compute beyond included quota. Priced per compute-hour equivalent derived from CU usage.

- $0.46/GB-month: Neon Lake storage billed by logical GB-month. We may introduce archival tiers.

- 3 Branches: Each branch has isolated CPU and storage. You only pay for the delta (the difference) between branches.

- SOC 2 • HIPAA • GDPR

- Regional locations

- User-level pricing

- $0.52/CU-hour

- $0.49/GB-month

- 10 Branches

- Unlimited databases & tables
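The metered rates listed above can be turned into a rough monthly estimate. The sketch below is only an illustration, not ET1's billing logic: it assumes charges are linear, that any included quota is subtracted from compute before billing, and that storage is billed on the full amount. The names `Rates`, `METERED_3_BRANCH`, `METERED_10_BRANCH`, and `estimate_monthly_cost` are hypothetical and do not come from ET1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Per-unit prices in USD, copied from the tier lists above."""
    compute_per_cu_hour: float
    storage_per_gb_month: float


# Hypothetical labels: the page does not name these tiers, so they are
# identified here by their branch counts.
METERED_3_BRANCH = Rates(compute_per_cu_hour=0.28, storage_per_gb_month=0.46)
METERED_10_BRANCH = Rates(compute_per_cu_hour=0.52, storage_per_gb_month=0.49)


def estimate_monthly_cost(
    rates: Rates,
    cu_hours: float,
    storage_gb: float,
    included_cu_hours: float = 0.0,
) -> float:
    """Estimate one month's bill in USD.

    Assumption: only compute beyond the included quota is billed, and
    storage is billed on the full logical GB-month figure.
    """
    billable_cu_hours = max(cu_hours - included_cu_hours, 0.0)
    compute_cost = billable_cu_hours * rates.compute_per_cu_hour
    storage_cost = storage_gb * rates.storage_per_gb_month
    return round(compute_cost + storage_cost, 2)


if __name__ == "__main__":
    # Example: 100 CU-hours and 10 GB on the 3-branch rates, no included quota.
    print(estimate_monthly_cost(METERED_3_BRANCH, cu_hours=100, storage_gb=10))
```

Passing `included_cu_hours` models a quota that is consumed first; if usage stays under the quota, only storage is charged in this sketch.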

Training Documentation

Use the training material to learn more about ET1 and how it solves data-wrangling problems.

ET1 Basic Training

If you need help getting started, begin here.

ET1 Video Training

Learn the basics, the features, and more.

Future Insight

We see the future as focused on adoption, training, and creating Easy Tools for anyone. We are building an emerging technology while maintaining a creative user experience that is inviting and friendly for all ages.

Inspiration

We are inspired by software, video games, and sci-fi movies like The Matrix, Minority Report, and Iron Man.

Join beta.


Memento Pattern: Snapshots for State Restoration

Managing complex data systems requires a robust mechanism not only for current operations but to restore historical states quickly and elegantly. Businesses consistently demand reliability in data management, analytics accuracy, and seamless innovation adoption. One...

Cardinality Estimation: Counting Uniques Efficiently

In today's rapidly growing data landscape, understanding the scale and uniqueness of your data points can often feel like counting the stars in the night sky—seemingly endless, complex, and resource-intensive. Businesses navigating petabytes of diverse data cannot...

Chain of Responsibility: Flowing Errors Downstream

Imagine you're building a data pipeline, intricately crafting each phase to streamline business intelligence insights. Your analytics stack is primed, structured precisely to answer the questions driving strategic decisions. But amid the deluge of information...

Approximations vs Accuracy: Speeding Up Heavy Jobs

In today's data-driven world, businesses chase perfection, desiring pinpoint accuracy in every computation and insight. However, encountering large-scale datasets and massive workloads often reminds us of an inconvenient truth—absolute accuracy can be costly in terms...

Visitor Pattern: Traversing Complex Schemas

In the fast-paced era of digital transformation, organizations are inundated with vast amounts of data whose structures continually evolve, often becoming increasingly complex. Technological decision-makers frequently face the challenge of efficiently navigating and...

Quantiles at Scale: Percentiles Without Full Sorts

In today's data-driven landscape, quantiles and percentiles serve as integral tools for summarizing large datasets. Reliability, efficiency, and performance are paramount, but when data reaches petabyte scale, calculating these statistical benchmarks becomes...

Template Method: Standardizing Workflow Blueprints

In today's fast-paced technology landscape, businesses face unprecedented complexities, rapid evolutions, and increasingly ambitious goals. Decision-makers recognize the critical need to standardize processes to maintain clarity, drive efficiency, and encourage...

Fingerprints & Checksums: Ensuring Data Integrity

In an age dominated by radical digital innovation, safeguarding your organization's critical data has become more crucial than ever. Data integrity forms the bedrock of reliable analytics, strategic planning, and competitive advantage in a marketplace that demands...

Builder Pattern: Crafting Complex Transformations

The software world rarely provides one-size-fits-all solutions, especially when you're dealing with data, analytics, and innovation. As projects evolve and systems become increasingly complex, merely writing more lines of code isn't the solution; clarity, modularity,...

Features of Today()+1

Available Now()